Virtual Memory In a Nutshell

If it's there, and you can see it, it's real.

If it's not there, and you can see it, it's virtual.

If it's there, and you can't see it, it's transparent.

If it's not there, and you can't see it, you erased it.

-IBM poster explaining virtual memory, circa 1978

What is Virtual Memory?

The memory management subsystem is one of the most important parts of the operating system. Since the early days of computing, there has been a need for more memory than exists physically in a system. Strategies have been developed to overcome this limitation and the most successful of these is virtual memory. Virtual memory makes the system appear to have more memory than is physically present by sharing it among competing processes as they need it. The VM, more than any other subsystem, affects the overall performance of the operating system.

Benefits of Virtual Memory

- Large Address Spaces

- Protection

- Memory Mapping

- Fair Physical Memory Allocation

- Shared Virtual Memory

How does this manifest itself in Linux?

Linux can use either a normal file in the filesystem or a separate partition for swap space. A swap partition is faster, but it is easier to change the size of a swap file (there's no need to repartition the whole hard disk, and possibly install everything from scratch). When you know how much swap space you need, you should go for a swap partition. If you are uncertain, you can start with a swap file, use the system for a while to get a feel for how much swap you need, and then make a swap partition once you're confident about its size.

You should also know that Linux allows one to use several swap partitions and/or swap files at the same time. This means that if you only occasionally need an unusual amount of swap space, you can set up an extra swap file at such times, instead of keeping the whole amount allocated all the time.

Monitoring Virtual Memory

sort -n /dev/urandom &

Also see vmstat, top, free -m

During sort

Mem: 3369572k total, 3242668k used, 126904k free, 128664k buffers

Swap: 2040244k total, 102636k used, 1937608k free, 2921304k cached

Sort cancelled

Mem: 3369572k total, 475692k used, 2893880k free, 121604k buffers

Swap: 2040244k total, 102636k used, 1937608k free, 215344k cached

A demonstration: malloc_max.c

Tuning Virtual Memory

Swappiness is a kernel "knob" (located in /proc/sys/vm/swappiness) used to tweak how much the kernel favors swap over RAM; high swappiness means the kernel will swap out a lot, and low swappiness means the kernel will try not to use swap space.

Example: echo 0 > /proc/sys/vm/swappiness

Paging and Swapping: An Overview

An excellent review of CPSC 457 and memory management in Linux can be found here.

The Paging Game explains how virtual memory works.

An analogy for paging and swapping:

- If you are reading a book, you do not need to have all the pages spread out on a table to work effectively, just the page you are currently using. I remember many times in college when I had the entire table top covered with open books, including my notebook. As I was studying, I would read a little from one book, take notes on what I read, and, if I needed more details on that subject, I would either go to a different page or a completely different book.

- Just as I only need to have open the pages I am working with currently, a process needs to have only those pages in memory with which it is working.

- Like me, if the process needs a page that is not currently available (not in physical memory), it needs to go get it (usually from the hard disk).

- If another student came along and wanted to use that table, there might be enough space for him or her to spread out his or her books as well. If not, I would have to close some of my books (maybe putting bookmarks at the pages I was using). If another student came along or the table was fairly small, I might have to put some of the books away.

- It is also the kernel's responsibility to ensure that no one process hogs all available memory, just like the librarian telling me to make some space on the table.

The paging system can be divided into two sections. First, the policy algorithm decides which pages to write out to disk and when to write them. Second, the paging mechanism carries out the transfer and pages data back into physical memory when it is needed again.

Linux uses a Least Recently Used (LRU) page aging technique to fairly choose pages which might be removed from the system. This scheme involves every page in the system having an age which changes as the page is accessed. The more that a page is accessed, the younger it is; the less that it is accessed, the older and more stale it becomes. Old pages are good candidates for swapping.
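The aging idea can be sketched as a toy model. This is not the kernel's code: the class name `PageAger`, the starting score, and the step sizes are all assumptions picked for illustration. A higher score stands for a younger page, and a page whose score reaches zero is treated as old enough to swap out.

```python
class PageAger:
    """Toy page-aging model: a higher score means a younger (recently used) page."""

    def __init__(self, initial=3, advance=3, decline=1, maximum=20):
        # All four values are illustrative assumptions.
        self.initial, self.advance = initial, advance
        self.decline, self.maximum = decline, maximum
        self.score = {}  # page number -> current score

    def touch(self, page):
        """Record an access: the page gets younger."""
        if page not in self.score:
            self.score[page] = self.initial
        else:
            self.score[page] = min(self.maximum, self.score[page] + self.advance)

    def tick(self):
        """Periodic scan: every page gets a little older."""
        for page in self.score:
            self.score[page] = max(0, self.score[page] - self.decline)

    def swap_candidates(self):
        """Pages whose score has dropped to zero are candidates for swapping."""
        return sorted(p for p, s in self.score.items() if s == 0)


ager = PageAger()
for page in (1, 2, 3):
    ager.touch(page)
for _ in range(3):
    ager.touch(1)      # page 1 stays busy
    ager.tick()        # pages 2 and 3 only get older
print(ager.swap_candidates())
```

Page 1 keeps getting touched, so it never becomes a candidate, while the idle pages age out.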

Moving unused parts out to disk used to be done manually by the programmer, back in the early days of computing. Programmers had to expend vast amounts of effort keeping track of what was in memory at a given time, and rolling segments in and out as needed. Older languages like COBOL still contain a large vocabulary of features for expressing this memory overlaying - totally obsolete and inexplicable to the current generation of programmers.

As the processor executes a program it reads an instruction from memory and decodes it. In decoding the instruction, the processor may need to fetch or store the contents of a location in memory. The processor then executes the instruction and moves on to the next instruction in the program. In this way the processor is always accessing memory either to fetch instructions or to fetch and store data.

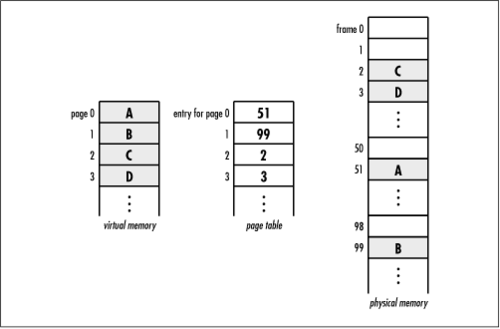

In a virtual memory system all of these addresses are virtual addresses and not physical addresses. These virtual addresses are converted into physical addresses by the processor based on information held in a set of tables maintained by the operating system.

Address translation takes place using a page table and is fast due to dedicated hardware. Each process has its own page table that maps pages of its virtual address space to frames in physical memory. When a process references a particular virtual address, the appropriate entry in its page table is inspected to determine in which physical frame the page resides. When a process references a virtual address not yet in a frame, a page fault occurs and a frame is allocated in physical memory.
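The lookup described above can be sketched in a few lines. This is a simplified model, not how the hardware or the kernel represents page tables: the 4096-byte page size, the `PageTable` class, and the list of free frame numbers are assumptions made for the example.

```python
PAGE_SIZE = 4096  # assumed page size in bytes


class PageTable:
    """Toy per-process page table: virtual page number -> physical frame number."""

    def __init__(self, free_frames):
        self.entries = {}
        self.free_frames = list(free_frames)  # hypothetical pool of free frames
        self.faults = 0

    def translate(self, vaddr):
        """Return the physical address for a virtual address."""
        vpn, offset = divmod(vaddr, PAGE_SIZE)
        if vpn not in self.entries:
            # Page fault: allocate a frame for this page on demand.
            # (A real system would evict a page if no frame were free.)
            self.faults += 1
            self.entries[vpn] = self.free_frames.pop(0)
        return self.entries[vpn] * PAGE_SIZE + offset


pt = PageTable(free_frames=[7, 2, 9])
print(hex(pt.translate(0x1234)))  # first touch of virtual page 1 faults
print(hex(pt.translate(0x1ff0)))  # same page again, no new fault
print(pt.faults)
```

The offset within the page is carried over unchanged; only the page number is replaced by a frame number.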

A Windows user spends 1/3 of his life sleeping, 1/3 working, 1/3 waiting.

The memory manager is a part of the operating system that keeps track of associations between virtual addresses and physical addresses and handles paging. To the memory manager, the page is the basic unit of memory. The Memory Management Unit (MMU), which is a hardware agent, performs the actual translations.

Very broadly, memory management under Linux has two components. The first deals with allocating and freeing physical memory. The second handles virtual memory. This is an overview of the main subsystems.

Picture is described here

Management of Physical Memory

Buddy system: memory allocation and deallocation algorithm that Linux uses.

When page frames are allocated and deallocated, the system runs into a memory fragmentation problem called external fragmentation. This occurs when the available page frames are spread out throughout memory in such a way that large amounts of contiguous page frames are not available for allocation although the total number of available page frames is sufficient. That is, the available page frames are interrupted by one or more unavailable page frames, which breaks continuity. There are various approaches to reduce external fragmentation. Linux uses an implementation of a memory management algorithm called the buddy system.

Buddy systems maintain lists of available blocks of memory. Each list points to blocks of a different size, but all sizes are powers of two. The number of lists depends on the implementation. Page frames are allocated from the list of free blocks of the smallest possible size, which keeps larger contiguous blocks available for larger requests. When an allocated block is returned, the buddy system searches the free lists for an available block of the same size as the returned block. If such a block is contiguous to the returned block, the two are merged into a block twice the size of each. These blocks (the returned block and the available block that is contiguous to it) are called buddies, hence the name "buddy system." This way, the kernel ensures that larger block sizes become available as soon as page frames are freed.
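A minimal sketch of the splitting and merging logic follows. It is a toy model rather than the Linux implementation: the `BuddyAllocator` class is hypothetical, block sizes are given as an order k meaning 2**k page frames, and a block's buddy is found by flipping one bit of its start frame number.

```python
class BuddyAllocator:
    """Toy buddy allocator managing 2**max_order page frames."""

    def __init__(self, max_order):
        self.max_order = max_order
        # free_lists[k] holds the start frames of free blocks of 2**k frames.
        self.free_lists = [set() for _ in range(max_order + 1)]
        self.free_lists[max_order].add(0)

    def alloc(self, order):
        """Return the start frame of a block of 2**order frames, or None."""
        k = order
        while k <= self.max_order and not self.free_lists[k]:
            k += 1                      # find the smallest free block that fits
        if k > self.max_order:
            return None
        start = min(self.free_lists[k])
        self.free_lists[k].remove(start)
        while k > order:                # split, leaving the upper half free
            k -= 1
            self.free_lists[k].add(start + (1 << k))
        return start

    def free(self, start, order):
        """Return a block and merge it with its buddy while the buddy is free."""
        while order < self.max_order:
            buddy = start ^ (1 << order)
            if buddy not in self.free_lists[order]:
                break
            self.free_lists[order].remove(buddy)
            start = min(start, buddy)
            order += 1
        self.free_lists[order].add(start)


buddy = BuddyAllocator(max_order=3)   # 8 page frames in total
a = buddy.alloc(0)                    # one frame
b = buddy.alloc(1)                    # two frames
buddy.free(a, 0)
buddy.free(b, 1)
print(a, b, buddy.free_lists[3])
```

Once both blocks are returned, the merges cascade and the allocator is back to a single free block covering all eight frames.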

Slab allocator: manages the allocation of memory sizes smaller than a page.

Pages are the basic unit of memory for the memory manager. However, processes generally request memory on the order of bytes, not on the order of pages. To support the allocation of smaller memory requests made through calls to functions like kmalloc(), the kernel implements the slab allocator, which is a layer of the memory manager that acts on acquired pages.

The slab allocator seeks to reduce the cost incurred by allocating, initializing, destroying, and freeing memory areas by maintaining a ready cache of commonly used memory areas. This cache maintains the memory areas allocated, initialized, and ready to deploy. When the requesting process no longer needs the memory areas, they are simply returned to the cache.
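The caching idea can be illustrated with a small object pool. This sketch only mimics the behavior described above; the `ObjectCache` class and its constructor argument are assumptions for the example and bear no relation to the kernel's actual data structures.

```python
class ObjectCache:
    """Toy slab-style cache: freed objects stay initialized and ready for reuse."""

    def __init__(self, constructor):
        self.constructor = constructor
        self.free_objects = []
        self.constructed = 0   # how many times the constructor had to run

    def alloc(self):
        if self.free_objects:
            return self.free_objects.pop()   # reuse a cached object
        self.constructed += 1
        return self.constructor()

    def free(self, obj):
        # Hand the object back to the cache instead of destroying it.
        self.free_objects.append(obj)


cache = ObjectCache(constructor=lambda: bytearray(64))
first = cache.alloc()
cache.free(first)
second = cache.alloc()
print(second is first, cache.constructed)
```

The second allocation is served from the cache, so the constructor runs only once.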

If we run the command cat /proc/slabinfo, the existing slab allocator caches are listed.

Management of Virtual Memory

The Linux Virtual Memory Manager is responsible for maintaining the address space visible to each process. It creates pages of virtual memory on demand and manages the loading of those pages from disk or their swapping back out to disk as required. In Linux, the manager maintains two separate views of a process's address space:

- as a set of separate regions (logical view)

- as a set of pages (physical view).

In the logical view the address space consists of a set of nonoverlapping regions, each region representing a continuous, page-aligned subset of the address space. Each region is described internally by a single vm_area_struct structure that defines the properties of the region, including the process's read, write, and execute permissions in the region, and information about any files associated with the region.
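On Linux, the regions of a process can be inspected from user space through /proc/<pid>/maps, where each line lists an address range, its permissions, and the backing file, if any. The parser below is a small sketch; the `Region` type and the sample line are made up for illustration.

```python
from typing import NamedTuple


class Region(NamedTuple):
    start: int
    end: int
    perms: str   # e.g. "r-xp": read, no write, execute, private
    path: str    # backing file, or "" if there is none


def parse_maps_line(line):
    """Parse one line in the format of /proc/<pid>/maps into a Region."""
    fields = line.split(None, 5)
    start, end = (int(x, 16) for x in fields[0].split("-"))
    path = fields[5].strip() if len(fields) > 5 else ""
    return Region(start, end, fields[1], path)


# Made-up sample line in the maps format.
sample = "00400000-0040c000 r-xp 00000000 08:01 1234 /bin/cat"
region = parse_maps_line(sample)
print(region.perms, (region.end - region.start) // 4096, region.path)
```

To look at a live process, feed the lines of /proc/self/maps to `parse_maps_line`.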

The physical view is stored in the hardware page tables for the process. The page-table entries determine the exact current location of each page of virtual memory.

Virtual memory regions

There are several types of virtual memory regions. One property that characterizes a region is its backing store, which describes where the pages for the region come from. A region backed by nothing represents demand-zero memory. When a process tries to read a page in such a region, it is simply given back a page of memory filled with zeros. Alternatively, a region can be backed by a file. Any number of processes can map the same region of the same file, and they will all end up using the same page of physical memory for the purpose.

A region may also be defined by its reaction to writes. The mapping of a region into the process's address space can be either private or shared. If a process writes to a privately mapped region, then the pager detects that a copy-on-write is necessary to keep the changes local to the process. In contrast, writes to a shared region result in updating of the object mapped into that region, so that the change will be visible immediately to any other process that is mapping that object.
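These behaviors can be observed from user space with the mmap call. The sketch below uses Python's mmap module on a Unix system (the `flags` and `prot` arguments are Unix-only); the helper name `write_through_mapping` and the use of a temporary file are assumptions for the demonstration.

```python
import mmap
import os
import tempfile


def write_through_mapping(flags):
    """Map a one-page temp file with `flags`, write through the mapping,
    and return the first bytes of the file as seen by read()."""
    fd, path = tempfile.mkstemp()
    try:
        os.write(fd, b"A" * mmap.PAGESIZE)
        with mmap.mmap(fd, mmap.PAGESIZE, flags=flags,
                       prot=mmap.PROT_READ | mmap.PROT_WRITE) as mapping:
            mapping[0:5] = b"hello"
            if flags == mmap.MAP_SHARED:
                mapping.flush()          # push the change to the file
        os.lseek(fd, 0, os.SEEK_SET)
        return os.read(fd, 5)
    finally:
        os.close(fd)
        os.unlink(path)


# Anonymous mapping: backed by nothing, so it reads as zeros.
with mmap.mmap(-1, mmap.PAGESIZE) as anon:
    print(anon[:4])

print(write_through_mapping(mmap.MAP_PRIVATE))  # file keeps its old contents
print(write_through_mapping(mmap.MAP_SHARED))   # file sees the write
```

With a private mapping the write stays local to the process, while with a shared mapping it reaches the underlying file.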

Lifetime of a Virtual Address Space

The exec() system call tells the kernel to run a new program within the current process, completely overwriting the current execution context with the initial context of the new program. The process is given a new, completely empty virtual address space, and the routines that load the program populate it with virtual memory regions.

Creating a new process with fork() involves creating a complete copy of the existing process's virtual address space. The kernel copies the parent's vm_area_struct descriptors, then creates a new set of page tables for the child.

Both the child and the parent continue executing with the instruction that follows the call to fork. The child is a copy of the parent. For example, the child gets a copy of the parent's data space, heap, and stack. Note that this is a copy for the child; the parent and the child do not share these portions of memory. The parent and the child share the text segment.

Current implementations don't perform a complete copy of the parent's data, stack, and heap, since a fork is often followed by an exec. Instead, a technique called copy-on-write (COW) is used. These regions are shared by the parent and the child and have their protection changed by the kernel to read-only. If either process tries to modify these regions, the kernel then makes a copy of that piece of memory only, typically a "page" in a virtual memory system.
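Copy-on-write can be modeled with reference-counted frames. This is a simulation of the idea only, since the real mechanism relies on read-only page protections and page faults handled by the kernel; the `Frame` and `AddressSpace` classes are hypothetical.

```python
class Frame:
    """A physical page frame plus a count of address spaces that map it."""

    def __init__(self, data):
        self.data = bytearray(data)
        self.refs = 1


class AddressSpace:
    def __init__(self, pages):
        self.pages = pages            # virtual page number -> Frame

    def fork(self):
        """Share every frame with the child instead of copying it."""
        for frame in self.pages.values():
            frame.refs += 1
        return AddressSpace(dict(self.pages))

    def write(self, vpn, offset, value):
        frame = self.pages[vpn]
        if frame.refs > 1:            # shared: copy just this one page first
            frame.refs -= 1
            frame = Frame(frame.data)
            self.pages[vpn] = frame
        frame.data[offset] = value


parent = AddressSpace({0: Frame(b"data"), 1: Frame(b"heap")})
child = parent.fork()
child.write(1, 0, ord("H"))
print(parent.pages[0] is child.pages[0])   # untouched page is still shared
print(bytes(parent.pages[1].data), bytes(child.pages[1].data))
```

Only the page the child wrote to is duplicated; the parent's copy is unchanged and every other page remains shared.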

More Details

You take the blue pill, the story ends. You wake up and believe whatever you want to believe. You take the red.... you stay in and I show you just how deep the rabbit hole goes....

-Morpheus in the Matrix

Linux MM Wiki

See the source code

Understanding the Linux Virtual Memory Manager (html)

Understanding the Linux Virtual Memory Manager (pdf)

Linux Kernel Primer, A Top-Down Approach for x86 and PowerPC Architectures

Process Segments

Operating System Concepts

Obligatory Windows Section

- Computers are like air conditioners: they stop working when you open windows.

- "Microsoft is not the answer. Microsoft is the question. NO is the answer."

- "Windows 95 /n./ 32 bit extensions and a graphical shell for a 16 bit patch to an 8 bit operating system originally coded for a 4 bit microprocessor, written by a 2 bit company that can't stand 1 bit of competition."

- "Mac users swear by their Mac, PC users swear at their PC."

- "Microsoft Works." - Oxymoron

Site last modified: April 15, 2009